TikTok's UK Layoffs Highlight Shift to AI Amid New Safety Regulations

HIGHLIGHTS

- TikTok plans to lay off hundreds of UK content moderators, reallocating work to other European offices and third-party providers.

- The company is increasing its reliance on artificial intelligence; automated systems currently detect and remove over 85% of rule-breaking content.

- The layoffs coincide with the UK's new Online Safety Act, which mandates stricter content checks and age verification.

- The Communication Workers Union criticizes the move, citing risks to user safety from immature AI systems.

- Despite the layoffs, TikTok's revenue in Europe grew by 38% to $6.3 billion in 2024, while operating losses narrowed from $1.4 billion to $485 million.

TikTok is set to lay off hundreds of content moderators in the UK as part of a global reorganization aimed at enhancing its Trust and Safety operations. The move comes as the company increasingly relies on artificial intelligence (AI) to manage content moderation, a strategy that has sparked criticism amid new online safety regulations in the UK.

AI Takes Center Stage in Content Moderation

The viral video platform, owned by Chinese tech giant ByteDance, announced that the affected roles would be redistributed to other European offices and third-party providers. This shift is part of a broader strategy to concentrate operations in fewer locations globally, leveraging AI to identify and remove content that violates community guidelines. Currently, over 85% of such content is detected and removed by automated systems.

Regulatory Pressure and Union Concerns

The layoffs occur against the backdrop of the UK's recently enacted Online Safety Act, which imposes stringent requirements on social media companies to verify user ages and monitor harmful content. Non-compliance could result in fines of up to 10% of a company's global turnover. TikTok has nonetheless faced criticism for not doing enough to protect users, and the UK data watchdog launched a major investigation earlier this year.

The Communication Workers Union (CWU) has voiced strong opposition to the layoffs, arguing that replacing human moderators with AI could jeopardize user safety. John Chadfield, CWU National Officer for Tech, stated, "TikTok workers have long been sounding the alarm over the real-world costs of cutting human moderation teams in favor of hastily developed, immature AI alternatives."

Financial Growth Amid Operational Changes

Despite the operational changes, TikTok's financial performance in Europe has been robust. Recent filings show a 38% increase in revenue to $6.3 billion in 2024, with operating losses narrowing significantly from $1.4 billion in 2023 to $485 million. This growth underscores the platform's expanding influence even as it navigates regulatory challenges and internal restructuring.

WHAT THIS MIGHT MEAN

As TikTok continues to streamline its operations and invest in AI, the company may face increased scrutiny from regulators concerned about the efficacy of automated systems in safeguarding users. The success of this transition will likely depend on the robustness of AI technologies and their ability to adapt to evolving content moderation needs. Furthermore, the outcome of the UK data watchdog's investigation could set a precedent for how social media platforms are regulated in the future. If TikTok's AI-driven approach proves effective, it could pave the way for similar strategies across the industry, potentially reshaping the landscape of content moderation.

Related Articles

UN Report: Sudan's El Fasher Siege Shows Genocide Hallmarks

British Couple's 10-Year Sentence in Iran Sparks Outcry

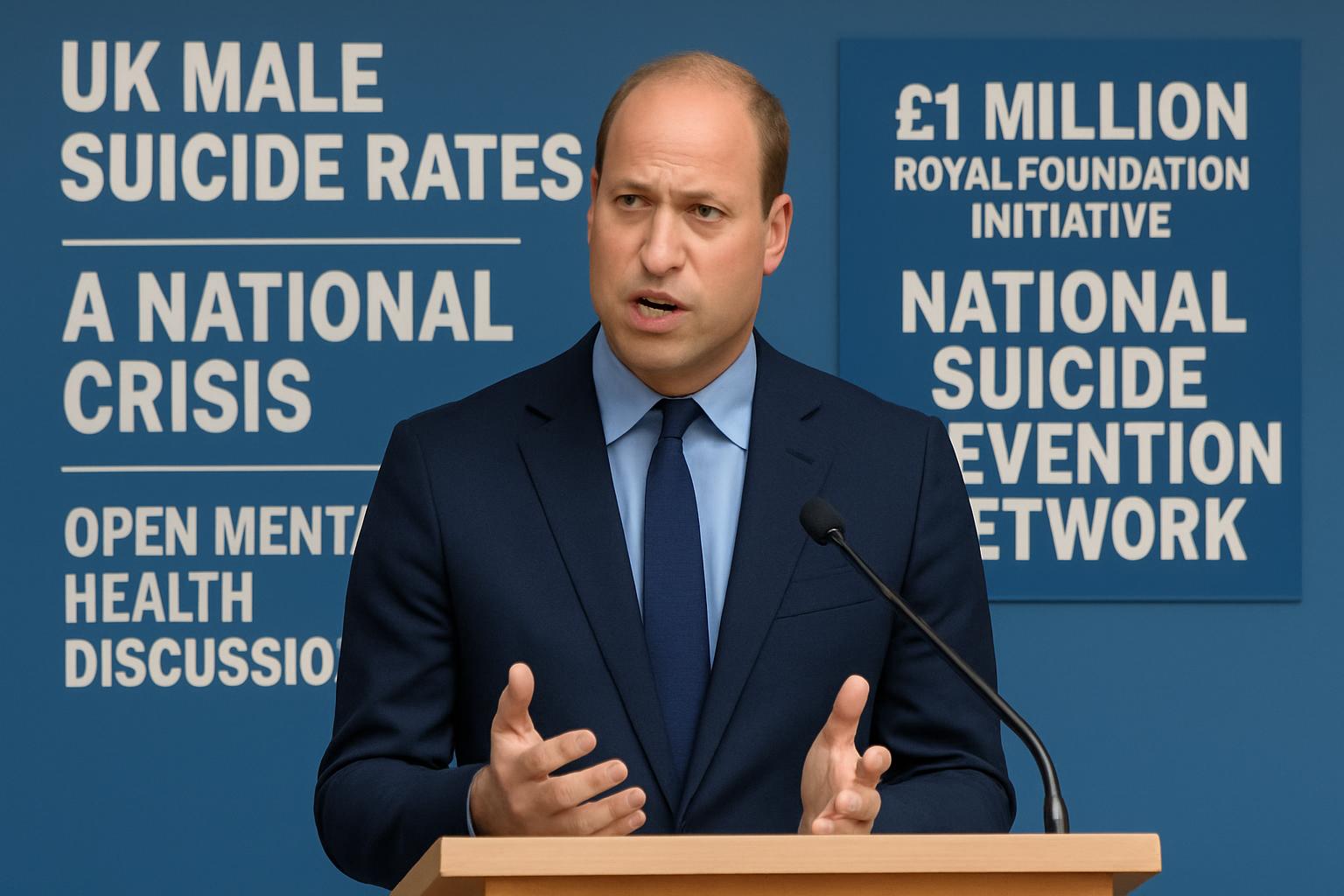

Prince William Calls for Action on UK Male Suicide Rates

UK to Enforce Swift Removal of Non-Consensual Intimate Images by Tech Firms

Reform UK to Reinstate Two-Child Benefit Cap Amidst Political Controversy

TfL Advert Banned for Reinforcing Harmful Racial Stereotypes

Ethan Brooks| Published

Ethan Brooks| Published HIGHLIGHTS

- TikTok plans to lay off hundreds of UK content moderators, reallocating work to other European offices and third-party providers.

- The company is increasing its reliance on artificial intelligence, which currently removes 85% of rule-breaking content.

- The layoffs coincide with the UK's new Online Safety Act, which mandates stricter content checks and age verification.

- The Communication Workers Union criticizes the move, citing risks to user safety from immature AI systems.

- Despite the layoffs, TikTok's revenue in Europe grew by 38% to $6.3 billion in 2024, while operating losses decreased significantly.

TikTok is set to lay off hundreds of content moderators in the UK as part of a global reorganization aimed at enhancing its Trust and Safety operations. The move comes as the company increasingly relies on artificial intelligence (AI) to manage content moderation, a strategy that has sparked criticism amid new online safety regulations in the UK.

AI Takes Center Stage in Content Moderation

The viral video platform, owned by Chinese tech giant ByteDance, announced that the affected roles would be redistributed to other European offices and third-party providers. This shift is part of a broader strategy to concentrate operations in fewer locations globally, leveraging AI to identify and remove content that violates community guidelines. Currently, over 85% of such content is detected and removed by automated systems.

Regulatory Pressure and Union Concerns

The layoffs occur against the backdrop of the UK's recently enacted Online Safety Act, which imposes stringent requirements on social media companies to verify user ages and monitor harmful content. Non-compliance could result in fines up to 10% of a company's global turnover. Despite these regulations, TikTok has faced criticism for not doing enough to protect users, with the UK data watchdog launching a major investigation earlier this year.

The Communication Workers Union (CWU) has voiced strong opposition to the layoffs, arguing that replacing human moderators with AI could jeopardize user safety. John Chadfield, CWU National Officer for Tech, stated, "TikTok workers have long been sounding the alarm over the real-world costs of cutting human moderation teams in favor of hastily developed, immature AI alternatives."

Financial Growth Amid Operational Changes

Despite the operational changes, TikTok's financial performance in Europe has been robust. Recent filings show a 38% increase in revenue to $6.3 billion in 2024, with operating losses narrowing significantly from $1.4 billion in 2023 to $485 million. This growth underscores the platform's expanding influence even as it navigates regulatory challenges and internal restructuring.

WHAT THIS MIGHT MEAN

As TikTok continues to streamline its operations and invest in AI, the company may face increased scrutiny from regulators concerned about the efficacy of automated systems in safeguarding users. The success of this transition will likely depend on the robustness of AI technologies and their ability to adapt to evolving content moderation needs. Furthermore, the outcome of the UK data watchdog's investigation could set a precedent for how social media platforms are regulated in the future. If TikTok's AI-driven approach proves effective, it could pave the way for similar strategies across the industry, potentially reshaping the landscape of content moderation.

Related Articles

UN Report: Sudan's El Fasher Siege Shows Genocide Hallmarks

British Couple's 10-Year Sentence in Iran Sparks Outcry

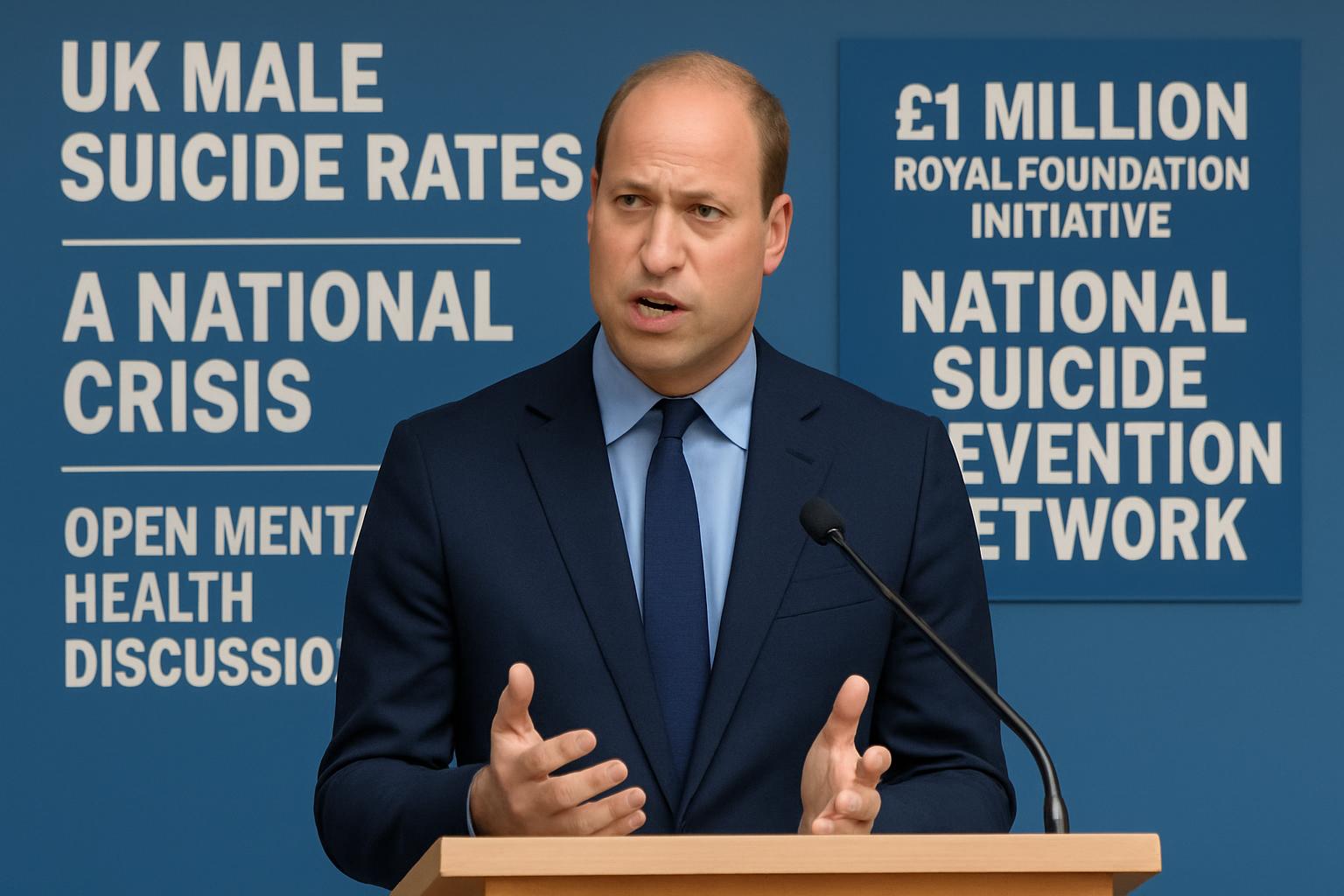

Prince William Calls for Action on UK Male Suicide Rates

UK to Enforce Swift Removal of Non-Consensual Intimate Images by Tech Firms

Reform UK to Reinstate Two-Child Benefit Cap Amidst Political Controversy

TfL Advert Banned for Reinforcing Harmful Racial Stereotypes