Facial Recognition Technology Sparks Debate Over Privacy and Bias

Published 9 December 2025

Highlights

- Merseyside Police have implemented Live Facial Recognition (LFR) technology to identify wanted individuals, sparking privacy concerns.

- Civil rights group Liberty has criticized the technology's rollout, citing the inclusion of children on watchlists and potential biases.

- A review commissioned by the Home Office found that facial recognition systems misidentify women and Black and Asian people at higher rates than white men.

- Police forces lobbied to use a biased system, arguing that a higher confidence threshold reduced operational effectiveness.

- The National Police Chiefs’ Council (NPCC) acknowledged the bias but reversed measures to mitigate it, prioritizing investigative leads.

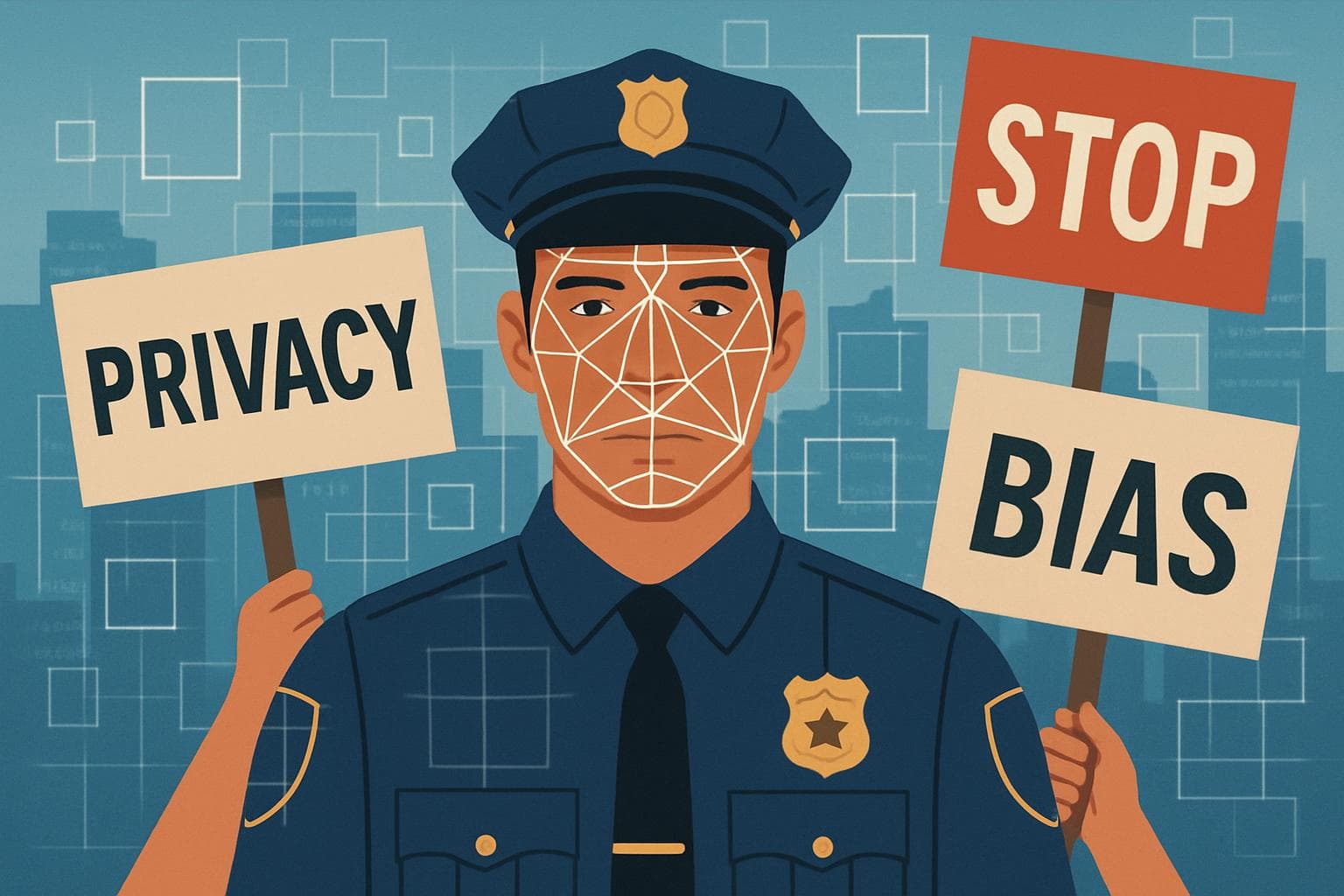

The deployment of Live Facial Recognition (LFR) technology by Merseyside Police has reignited debates over privacy and potential biases in law enforcement surveillance. This advanced technology, capable of identifying individuals from live camera feeds, aims to assist officers in making informed decisions about stopping and questioning suspects. However, its implementation has raised significant concerns among civil rights advocates.

Privacy and Civil Rights Concerns

Merseyside Police have assured the public that LFR is not a tool for mass surveillance. Assistant Chief Constable Jennifer Wilson emphasized that only individuals on a pre-determined watchlist would be identified, and no biometric data would be stored from those not on the list. Despite these assurances, civil rights group Liberty has called for a halt to the technology's rollout, citing the inclusion of children on police watchlists as a major concern. Liberty's director, Akiko Hart, expressed disappointment over the government's decision to expand the use of facial recognition without adequate safeguards.

Bias in Facial Recognition Systems

A recent review by the National Physical Laboratory (NPL), commissioned by the Home Office, revealed significant biases in facial recognition systems. The technology was found to misidentify Black and Asian individuals and women at higher rates than white men. Despite these findings, police forces successfully lobbied to use a biased system, arguing that a higher confidence threshold reduced the number of potential matches and, consequently, investigative leads.

Operational Challenges and Ethical Implications

The National Police Chiefs’ Council (NPCC) initially raised the confidence threshold to mitigate bias, but reversed the decision after complaints from police forces. NPCC documents noted that while the higher threshold reduced bias, it also significantly impacted operational effectiveness, with the share of searches producing potential matches dropping from 56% to 14%. The Home Office acknowledged the bias, stating that under certain settings the algorithm could produce false positives for Black women almost 100 times more frequently than for white women.
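The trade-off described here can be illustrated with a minimal sketch. It assumes a system that assigns each face comparison a similarity score and raises an alert when the score meets a confidence threshold. The `Comparison` class, the `summarize` function, the score scale and every number below are hypothetical and made up for illustration; none of them come from the NPL review, the NPCC documents or any real police system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Comparison:
    score: float       # similarity score in [0, 1] (hypothetical scale)
    same_person: bool  # ground truth, known only in a test setting


def summarize(comparisons, threshold):
    """Count alerts and false positives at a given confidence threshold."""
    flagged = [c for c in comparisons if c.score >= threshold]
    false_positives = sum(1 for c in flagged if not c.same_person)
    return {"flagged": len(flagged), "false_positives": false_positives}


# Made-up scores for illustration only; not taken from any real system.
TOY = [
    Comparison(0.95, True),
    Comparison(0.88, True),
    Comparison(0.82, False),
    Comparison(0.74, True),
    Comparison(0.71, False),
    Comparison(0.66, False),
    Comparison(0.52, False),
    Comparison(0.40, False),
]

low = summarize(TOY, threshold=0.6)   # lower threshold: more alerts
high = summarize(TOY, threshold=0.8)  # higher threshold: fewer alerts

print("threshold 0.6:", low)
print("threshold 0.8:", high)
```

In this toy data, raising the threshold from 0.6 to 0.8 cuts false positives from three to one, but it also halves the number of alerts and drops one genuine match. That is the same tension the NPCC documents describe between reducing bias and preserving investigative leads.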

Scenario Analysis

The ongoing deployment of facial recognition technology in the UK raises critical questions about balancing law enforcement needs with civil liberties. As the Home Office continues to expand the use of this technology, it faces mounting pressure to address privacy concerns and inherent biases. Legal challenges and public scrutiny may prompt the government to implement stricter regulations and transparency measures. Experts suggest that without significant reforms, the technology could exacerbate existing inequalities and erode public trust in law enforcement. The future of facial recognition in policing will likely depend on the government's ability to reconcile these complex issues.