UK Strengthens AI Regulations to Combat Child Sexual Abuse Imagery

HIGHLIGHTS

- The UK government is amending the Crime and Policing Bill to allow proactive testing of AI tools, checking that they cannot be used to generate child sexual abuse material (CSAM).

- Reports of AI-generated CSAM have more than doubled, from 199 between January and October 2024 to 426 in the same period of 2025, according to the Internet Watch Foundation.

- The new law empowers tech firms and child safety charities to test AI models for safety before release, aiming to prevent abuse at the source.

- The legislation also includes a ban on possessing, creating, or distributing AI tools designed to generate CSAM, with penalties of up to five years in prison.

- Child safety advocates stress the need for mandatory testing to ensure AI models are safe and do not contribute to online abuse.

In a significant move to bolster child safety online, the UK government has announced amendments to the Crime and Policing Bill, allowing for the proactive testing of artificial intelligence tools to ensure they do not generate child sexual abuse material (CSAM). This legislative change comes amid a worrying rise in AI-generated CSAM reports, which have more than doubled over the past year, according to the Internet Watch Foundation (IWF).

Proactive Testing for AI Tools

Under the new provisions, designated tech companies and child protection agencies will be authorized to examine AI models, such as those used in chatbots and image generators, to ensure they have robust safeguards against creating illegal imagery. Technology Secretary Liz Kendall emphasized that these measures are crucial for making AI systems safe from the outset. "By empowering trusted organizations to scrutinize their AI, we can stop abuse before it happens," she stated.

Rising Concerns and Legal Measures

The IWF reported a significant increase in AI-related CSAM, with 426 pieces of material removed between January and October 2025, up from 199 in the same period in 2024. The charity's chief executive, Kerry Smith, welcomed the government's proposals, highlighting the potential of AI tools to victimize survivors repeatedly. The bill also introduces a ban on possessing, creating, or distributing AI tools designed to generate CSAM, with offenders facing up to five years in prison.

Calls for Mandatory Safeguards

While the new measures have been broadly welcomed, child safety advocates like Rani Govender from the NSPCC argue that testing should be mandatory to ensure comprehensive protection. "To make a real difference for children, this cannot be optional," Govender insisted, urging the government to enforce a mandatory duty for AI developers to integrate child safety into product design.

Addressing the Growing Threat

The rise in AI-generated abuse imagery poses a significant challenge for law enforcement and child protection agencies. The IWF and other organizations have warned that AI-generated content is now sophisticated enough to make real and fabricated images difficult to tell apart, complicating efforts to police such material. The new legislation aims to address these challenges by letting developers and charities check that models have safeguards not only against CSAM but also against extreme pornography and non-consensual intimate images.

WHAT THIS MIGHT MEAN

The UK government's proactive approach to regulating AI tools marks a critical step in combating the proliferation of child sexual abuse imagery online. By allowing for pre-release testing, the legislation aims to prevent the creation of harmful content at its source. However, the effectiveness of these measures will depend on their implementation and the willingness of tech companies to comply with the new regulations.

Experts suggest that mandatory testing could significantly enhance child safety, but it would require robust enforcement mechanisms to ensure compliance. As AI technology continues to evolve, ongoing collaboration between the government, tech firms, and child protection agencies will be essential to adapt to emerging threats and protect vulnerable populations.

Looking ahead, the success of these initiatives could serve as a model for other countries grappling with similar challenges, potentially leading to international cooperation in regulating AI-generated content.

Related Articles

UK to Enforce Swift Removal of Non-Consensual Intimate Images by Tech Firms

UK Clinical Trial on Puberty Blockers Paused Amid Safety Concerns

US Supreme Court Ruling on Tariffs Sparks Uncertainty for UK and Global Trade

UK Government Eases Deer Culling to Protect Woodlands and Farmland

UN Report: Sudan's El Fasher Siege Shows Genocide Hallmarks

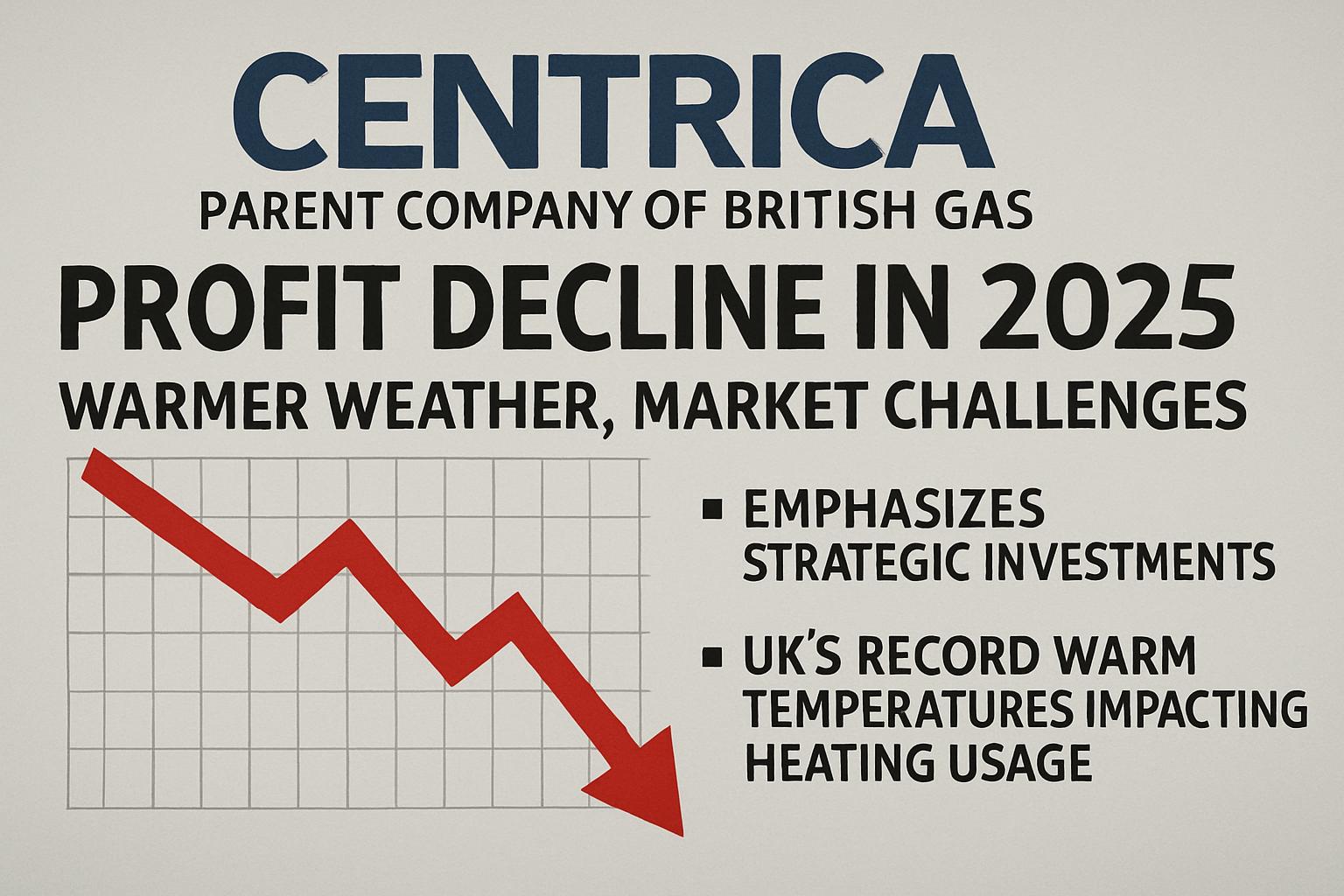

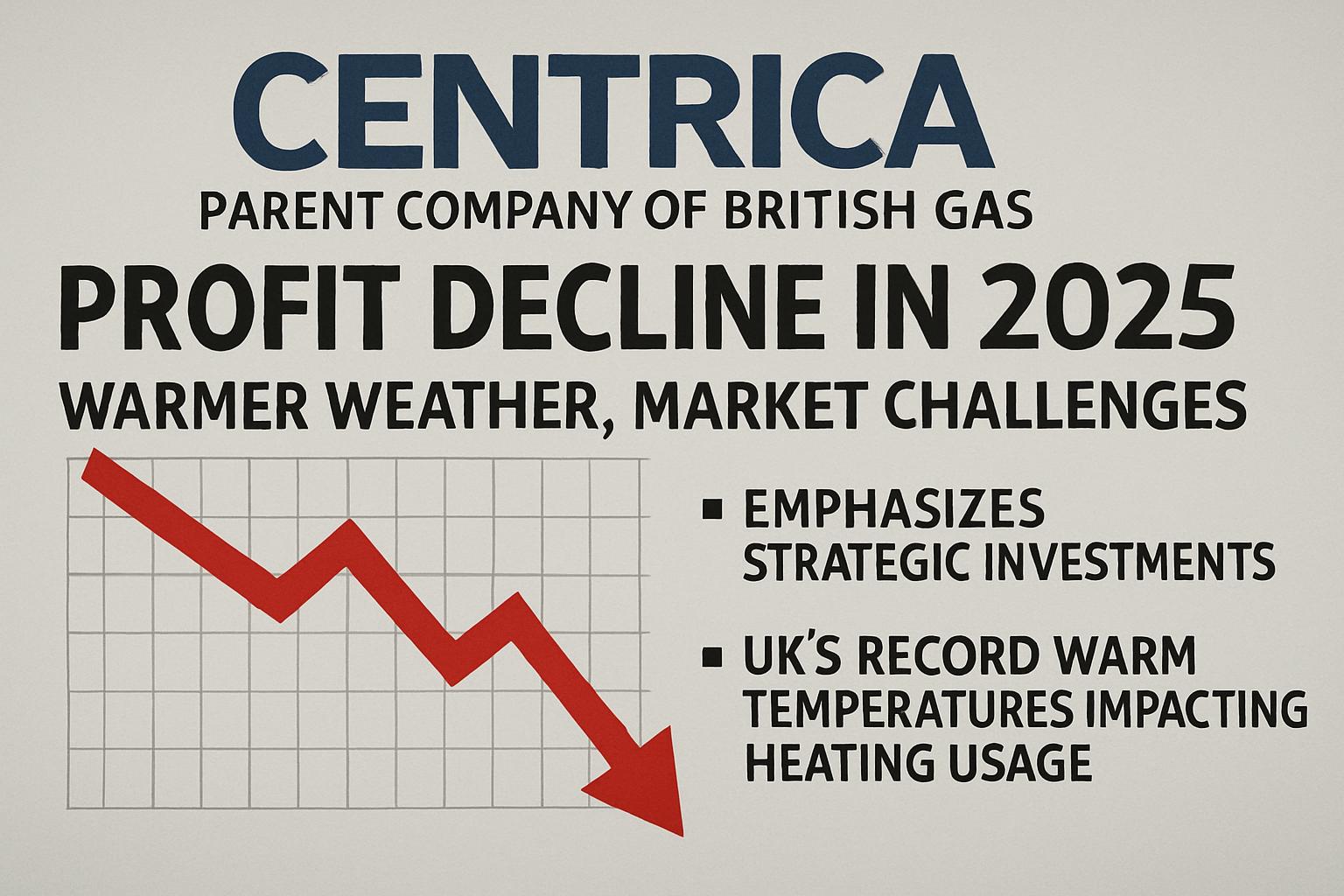

Centrica Faces Profit Decline Amid Warmer Weather and Market Challenges

Himanshu Kaushik| Published
